My Projects

My projects

Mittleider Weekly-Feed Mix Calculator

I love gardening. After months of studying how to grow plants the right way, I have created a calculator which uses mathematical optimization (linear programming) to make sure my mix of fertilizers always fits recommendations from experts in plant nutrition and contains everything necessary for plant's healthy growth.

With this calculator, you can quickly create a fully balanced Dr. Jacob Mittleider`s Weekly-Feed mix using virtually any sorts of fertilizers locally available to you.

The extra benefit of this tool is that it can guide the gardener step-by-step through the process of creating the mix by pointing on problems with the existing recipe.

Rustextile

Despite Markdown becoming de-facto standard for markup in comments and some blogs, it has quite limited expressiveness when it comes to creating rich online publications.

Textile, on the contrary, was created with CMS and complex publications in mind. For instance, it makes it possible to create out-of-the-ordinary content blocks without the need to use HTML. Personally, I used it quite a lot in my practice, and when the need arose I ported PHP-Textile parser to Rust. The port is not beautiful since I was trying to preserve the original code structure as much as possible to simplify porting of new features, but it's functional :)

Trade-archivist

While I was fiddling with CNNs and Transformers for text processing, I decided to try them on time series predictions, like stock and cryptocurrency values.

So I wrote a tool that could

- Collects realtime logs of all trading events from a set of cryptocurrency exchanges and stores them in a binary format as a series of Bzip achives. The events come through WebSocket connections to the respective exchange's API.

- Acts as a sever that provides a binary API for re-transmitting the trading events as they happen, and also for requesting arbitrary slices of the trading history (up to now). This can used for backtesting, training of various ML algorithms or streaming of the same realtime logs from the exchanges (in which case the server acts as a proxy with a unified API to all exchanges).

This have not made me rich, but helped to realize that WaveNet is goot not only for natural speech processing :)

Keras-transformer

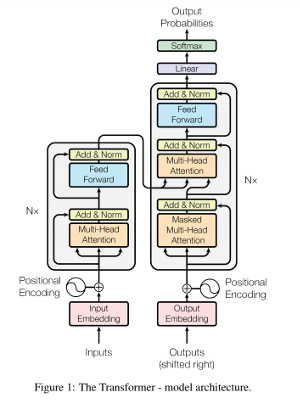

Keras-transformer is a library implementing nuts and bolts for building (Universal) Transformer models using Keras. It allows you to assemble a multi-step Transformer model in a flexible way.

The library supports positional encoding and embeddings, attention masking, memory-compressed attention, ACT (adaptive computation time). All pieces of the model (like self-attention, activation function, layer normalization) are available as Keras layers, so, if necessary, you can build your version of Transformer, by re-arranging them differently or replacing some of them.

For those who don't know, The (Universal) Transformer is a deep learning architecture described in arguably one of the most impressive DL papers of 2017 and 2018: Attention is All you need and "Universal Transformers" by Google Brain team.

Their authors brought the idea of recurrent multi-head self-attention, which has inspired a big wave of new research models that keep coming ever since, demonstrating new state-of-the-art results in many Natural Language Processing tasks, including translation, parsing, question answering, and even algorithmic tasks.

Unlike classical recurrent neural networks, Transformer trains much faster measured both as the time per epoch and the wall clock time. It's also capable of efficiently handling multiple long-term dependencies in texts.

When applied to text generation, Transformer creates more coherent stories, which don't degrade in quality with the growth of their length, as it is typically the case with the recurrent networks.

KERL

KERL is a collection of various Reinforcement Learning algorithms and related techniques implemented purely using Keras.

The goal of the project is to create implementations of state-of-the-art RL algorithms as well as a platform for developing and testing new ones, yet keep the code simple and portable thanks to Keras and its ability to use various backends. This makes KERL very similar to OpenAI Baselines, only with focus on Keras.

What works in KERL:

- Advantage Actor-Critic (A2C) [original paper]

- Proximal Policy Optimization (PPO) [original paper]

- World Models (WM) [original paper]

All algorithms support adaptive normalization of returns Pop-Art, described in DeepMind's paper "Learning values across many orders of magnitude". This greatly simplifies the training, often making it possible to just throw the algorithm at a task and get a decent result.

With KERL you can quickly train various agents to play Atari games from pixels and dive into details of their implementation. Here's an example of such agent trained with KERL (youtube video): Deep RL A2C Agent Playing Ms. Pacman

Limitations: Currently KERL does not support continuous control tasks and so far was tested only on various Atari games supported by The Arcade Learning Environment via OpenAI Gym.

Avalanche

Avalanche is a simple deep learning framework written in C++ and Python. Unlike the majority of the existing tools it is based on OpenCL, an open computing standard. This allows Avalanche to work on pretty much any GPU, including the ones made by Intel and AMD, even quite old models.

The project was created as an attempt to better understand how modern deep learning frameworks like TensorFlow do their job and to practice programming GPUs. Avalanche is based on a computational graph model.

It supports automatic differentiation, broadcasted operations, automatic memory management, can utilize multiple GPUs if needed.

The framework also works a backend for Keras, so if you know Keras, you can begin to use Avalanche without the need to learn anything about it.

CartGP

CartGP is a very simple and minimalistic C++/Python library implementing Cartesian Genetic Programming (CGP). The library currently supports classic form of CGP where nodes are arranged into a grid and no recurrent connections are allowed.

Check this jupyter notebook to see how to use the library from Python.