Kirill Mavreshko

Welcome, {{ visitor.name }}, to my personal page!

About me

A software developer for about 18 years, and counting.

Mostly worked on projects handling high volumes of data, often in real time, and then doing something clever with it :-) I love writing smart software and am not afraid to dig into the science of things to get there.

Worked remotely for many years. Had to learn to be disciplined and organized, since my pay was directly tied to the quality and overall results of my work.

Also, I value my passion for a particular project much more than the pay.

My skills

- Programming languages:

  - Rust: worked with Tokio, Actix-web, Hugging Face Candle, PyO3, Yew, and SeaORM at MTS Digital and while building Mealmind.

  - C++: coded mostly with Boost. Developed various processing and analytical tools for large volumes of data (ad traffic), some with Python bindings.

  - Python: web backends (a lot of Django) and data processing tools. Used async extensively to link multiple remote services.

  - C: high-traffic distributed morphology analysis for internet ads (with ZeroMQ).

  - JavaScript / TypeScript: mostly developed prototypes using Angular.

  - In the long-forgotten past, worked on projects in C#, Java, Perl, and PHP. Also familiar with Haskell and Lisp.

- English: CEFR level C1 (Advanced). Worked with international teams.

- Databases: PostgreSQL, CassandraDB, occasionally MongoDB and MySQL.

- OS: Linux user since 2000 (currently Debian/Ubuntu).

- DevOps: Decent with Ansible, Docker, Kubernetes (managed a bare-metal cluster), Jenkins, and GitLab CE (CI plus its Docker registry).

- Web stack: Know enough HTML + CSS to build simple prototypes and cooperate with more specialized team members.

- Software Design: Walked the path from raw ideas to goals, user stories, and working prototypes. Love automated testing (without religious zeal or blindly chasing coverage) and writing code that pleases whoever has to read it after me.

- Machine Learning: Deep understanding of modern neural network architectures and frameworks, how they work and how they are trained, especially for NLP. Have experience coding language models purely in Rust and programming GPUs using OpenCL.

- Organisational: Worked remotely for many years, part of the time as a freelancer. I believe this made me more responsible and self-organized.

- Electronics (a hobby): Embedded development (Rust/C++) of IoT and other devices based on ARM (STM32) and Espressif (ESP8266, ESP32) microcontrollers, communicating through MQTT and HTTP.

Experience

Tech Lead

Was responsible for planning and coordinating the development of various AI components, in addition to some experimental research on large language models (LLMs) with their own memory. Also trained the models, evaluated their capabilities, and worked on integrating them with other services through APIs.

Lead Rust developer

Developed a Rust backend using Actix for a system that stores and controls access to personal data. Spent some time on integration with "smart" devices via the MQTT protocol. Also was responsible for automated testing and auto-generated API documentation.

Software Developer

Mealmind (my own project)

When you're a software developer, you get paid for what you're good at right now. This creates a vicious cycle: you accumulate more and more specialized knowledge, which makes your employer happy but makes it harder and harder to turn to anything new.

This is why in 2017 I left my last cushy job and decided to spend some time expanding my skills in new directions that interested me.

Mealmind became my proving ground for catching up with new technologies in multiple areas, including:

I improved my Rust, using it for data processing (Tokio, SeaORM) as well as for the backend and frontend. Eventually I replaced many parts written in JavaScript and Python with Yew and Actix.

Did a lot of Deep Learning (neural networks) in general. Had a chance to work with multiple neural network architectures:

- Various LSTMs,

- CNNs, such as WaveNet (applied to time series), and Variational Autoencoders for image processing,

- Transformers for Natural Language Processing, like GPT, BERT, and their derivatives, including long-attention varieties like Reformer and Nyströmformer.

- Also took a deep dive into Reinforcement Learning and self-supervised learning.

In the process I had to do a lot of hyperparameter tuning, regularization, and optimization, learn how to structure such ML projects, and use pre-training to reduce training effort.

Got used to reading, dissecting, and reproducing complex research papers (in fields such as Reinforcement Learning, NLP, and optimisation theory).

Learned GPU-accelerated computing with OpenCL, including writing custom ML kernels.

Got experience in deployment with containers, their management and orchestration, using Docker, Kubernetes and Ansible.

Although not new to monitoring, this time I had to build it myself, using Eclipse Mosquitto, Grafana and Telegraf.

Sadly, due to the... let's say, global political and market changes, I had to abandon the project. Still, I'm grateful for the broad experience it gave me.

Senior Software Developer

IPONWEB BIDSWITCH

IPONWEB specialises in programmatic and real-time advertising technology and infrastructure. One of its divisions, BidSwitch, was created to help solve many of the underlying technical challenges and inefficiencies that hamper platform interconnectivity and trading at the infrastructure level.

Originally I started as a web frontend and backend developer using Python, Django, and Angular.

During my time there, I helped start several internal projects that later grew into new products or parts of them, most notably the BidSwitch UI, internal financial reporting, ad traffic forecasting, automatic creative approval, and some client-facing APIs.

In my last years at the company, I focused primarily on high-load backend projects related to processing and analyzing large amounts of data and communicating with other services. During this time I used C++ and Python, worked extensively with PostgreSQL and non-relational Cassandra and MongoDB clusters, as well as with many critical parts of modern IT infrastructure, including automated monitoring, testing, real-time error reporting, and continuous integration and delivery.

Senior Software Developer (as a freelancer)

Designed and implemented an entire online marketplace for actors playing Santa Claus (Ded Moroz in Russia) and providing related services. Integrated the project with the QIWI online payment system. Technologies: Python, Django, JavaScript, HTML, CSS, PostgreSQL.

Senior Software Developer (as a freelancer)

Smart Links is a marketing and advertising company. There I was responsible for:

- Design and implementation of a high-performance distributed morphology system for the Russian and Ukrainian languages. Because these languages are highly inflected, naive word matching and search don't work. The morphological system relies on a complex tree of language rules and dictionaries to convert words to their base (dictionary) forms in real time.

- Design and implementation of a distributed HTML content analyzer for advertisement system, along with Python bindings for some parts of it.

The system was written in C and relied on ZeroMQ for connectivity. It could process hundreds of documents (HTML pages) per second in Russian or Ukrainian on a typical home PC, normalizing texts and identifying key parts that could be targeted with ads.
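To illustrate the general idea of dictionary-plus-rules normalization, here is a deliberately tiny Python sketch (not the original C system): a dictionary of irregular forms is checked first, then the longest matching suffix rule is applied. The tables `IRREGULAR` and `SUFFIX_RULES` are hypothetical and cover only a few case endings of feminine nouns like "книга".

```python
# Hypothetical irregular forms that no suffix rule can handle.
IRREGULAR = {"людей": "человек", "дети": "ребёнок"}

# Hypothetical suffix rules (ending -> replacement); a real system has thousands.
SUFFIX_RULES = [("ами", "а"), ("ах", "а"), ("ой", "а"), ("ы", "а"), ("у", "а")]

MIN_STEM = 2  # never strip an ending if fewer characters than this would remain


def lemmatize(word):
    """Return the base (dictionary) form: irregular dictionary first,
    then the longest matching suffix rule, else the word itself."""
    w = word.lower()
    if w in IRREGULAR:
        return IRREGULAR[w]
    for ending, replacement in sorted(SUFFIX_RULES, key=lambda r: len(r[0]), reverse=True):
        if w.endswith(ending) and len(w) - len(ending) >= MIN_STEM:
            return w[: len(w) - len(ending)] + replacement
    return w


print(lemmatize("книгами"), lemmatize("Книгу"), lemmatize("людей"))
```

A production system also needs part-of-speech information to disambiguate forms, and it would store the rules in a tree keyed by reversed suffixes instead of sorting a list on every call.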

{% dated_content( title="Senior Software Developer (as a freelancer)", lead="Artela.ru (startup)", date="February 2010 - November 2010", tech="Python, Django, JavaScript, HTML, CSS, PostgreSQL", ) %} Was responsible for designing and implementing the architecture of a universal store for digital services such as VoIP telephony, web domains, and hosting.

This was my first commercial project where I could apply insights I learned from Alan Cooper's book "About Face" and other similar publications. I got to practice writing user stories and designing the entire structure of the user interface.

Integrated the store with several online payment systems: WebMoney, Yandex.Money, and PayPal. {% end %}

{% dated_content( title="Senior Software Developer (as a contractor)", lead="Gzt.ru news agency", date="February 2009 - September 2009", tech="Python, Django, JavaScript, MySQL, PostgreSQL, Nginx, HTML, CSS, SVN, Trac", ) %} Designed and developed the backend and part of the frontend (a heavily customized Django admin CMS) of the portal.

Due to high traffic and the very dynamic nature of the content, the project required a carefully crafted data model, complex fine-tuned SQL queries, and various caches at different levels. {% end %}

Software Developer, Co-founder

Domik63.ru Real Estate Information Portal

Was responsible for the development of both the backend and the frontend parts of the portal, and then for its maintenance & support.

Integrated the portal's database with multiple real estate agencies by providing tools for regularly updating the information from ordinary spreadsheets.

Technologies: Python, Django, PostgreSQL.

Lead Software Developer

Inter-M, Web Design Studio

Backend Web developer. Technologies: Python, Django, PostgreSQL, Linux, Mercurial.

Senior Software Developer

Unkom, Web Application Development Company

Originally joined as a backend web developer, later moving on to PHP and Java. Created a CMS and a forum engine that the company used as the base for multiple websites for businesses and government organizations. Notably, built the backend for the main [MuzTV](https://muz-tv.ru) site and forum.

Main technologies used: Perl, PHP, .NET, Java, MySQL, and PostgreSQL. OS: Linux (mostly) and Windows.

Formal Education & Courses

There were plenty over the years, so I listed only those for which I have formal documents.

Deep Learning Specialization

- Neural Networks and Deep Learning

- Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

- Structuring Machine Learning Projects

- Convolutional Neural Networks

- Sequence Models

Samara State Aerospace University

4-year Diploma of Incomplete Higher Education

- Probability Theory and Statistics

- Theory of Stochastic Processes

- Linear Algebra and Matrix Computations

- Information Theory

- Data Structures and Algorithms

- Calculus

- Optimization Theory

- Differential Equations

- Discrete Mathematics

- Numerical Analysis

- Databases and Expert Systems

- Control Theory

- Parallel Computing

Atyrau Lyceum School No. 17

My projects

Mittleider Weekly-Feed Mix Calculator

I love gardening. After months of studying how to grow plants properly, I created a calculator that uses mathematical optimization (linear programming) to make sure my fertilizer mix always fits the recommendations of plant nutrition experts and contains everything necessary for healthy plant growth.

With this calculator, you can quickly create a fully balanced Dr. Jacob Mittleider's Weekly-Feed mix using virtually any fertilizers available locally.

The extra benefit of this tool is that it guides the gardener step by step through creating the mix by pointing out problems with the existing recipe.
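The calculator's actual model is not described here, so the following is only a toy Python sketch of the linear-programming idea under simplifying assumptions: every recommendation is a minimum amount of a nutrient, every fertilizer has a known nutrient fraction by mass, and the goal is the smallest total mass. It solves the LP by brute-force vertex enumeration with exact fractions, which is fine for a handful of fertilizers; a real tool would use a proper LP solver. The function names and the fertilizer grades in the example are made up for illustration.

```python
from fractions import Fraction
from itertools import combinations


def solve_square(a, b):
    """Solve the square system a @ x = b exactly (Gauss-Jordan); None if singular."""
    n = len(a)
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col and m[r][col] != 0:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def min_cost_mix(cost, content, need):
    """Minimize sum(cost[j] * x[j]) subject to
    sum_j content[i][j] * x[j] >= need[i] for every nutrient i, and x[j] >= 0.

    Vertex enumeration: when an optimum exists, it is attained where n of the
    constraints hold with equality, so we try every such combination.
    """
    n = len(cost)
    # Every constraint as (coefficients, rhs), meaning coefficients . x >= rhs.
    rows = [(list(content[i]), need[i]) for i in range(len(need))]
    rows += [([1 if k == j else 0 for k in range(n)], 0) for j in range(n)]
    best_x, best = None, None
    for active in combinations(rows, n):
        x = solve_square([r[0] for r in active], [r[1] for r in active])
        if x is None:
            continue
        if all(sum(Fraction(c) * xi for c, xi in zip(coef, x)) >= rhs for coef, rhs in rows):
            value = sum(Fraction(c) * xi for c, xi in zip(cost, x))
            if best is None or value < best:
                best_x, best = x, value
    return best_x, best  # (None, None) if the requirements cannot be met


# Made-up grades (fraction of mass); columns are fertilizers A, B, C.
content = [
    [Fraction(34, 100), Fraction(12, 100), 0],  # nutrient 1
    [0, Fraction(61, 100), 0],                  # nutrient 2
    [0, 0, Fraction(50, 100)],                  # nutrient 3
]
need = [10, Fraction("6.1"), 5]  # minimum grams of each nutrient
mix, total_mass = min_cost_mix([1, 1, 1], content, need)  # cost = mass
```

Here fertilizer B is the only source of nutrient 2, so the optimum uses exactly as much of it as that nutrient requires and tops up nutrient 1 with A, the cheaper source of it per gram.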

Rustextile

Although Markdown has become the de facto standard for markup in comments and some blogs, it has quite limited expressiveness when it comes to creating rich online publications.

Textile, on the contrary, was created with CMS and complex publications in mind. For instance, it makes it possible to create out-of-the-ordinary content blocks without resorting to HTML. Personally, I used it quite a lot in my practice, and when the need arose, I ported the PHP-Textile parser to Rust. The port is not beautiful, since I tried to preserve the original code structure as much as possible to simplify porting new features, but it's functional :)
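As a rough illustration of Textile's block signatures (not Rustextile's or PHP-Textile's actual code), this toy Python function renders a single block such as `h2. Title` or `p(note#intro). Text`, where the parenthesized part sets the HTML class and id. The regex `BLOCK` is a hypothetical, heavily reduced subset: it handles only headings and paragraphs and ignores inline markup.

```python
import html
import re

# Toy subset of Textile block signatures: "h1." .. "h6." or "p.", with an
# optional "(class#id)" attribute group right before the dot.
BLOCK = re.compile(r"^(h[1-6]|p)(?:\(([\w-]*)(?:#([\w-]+))?\))?\. (.*)$", re.S)


def render_block(block):
    """Render one Textile-like block to HTML; unsigned text becomes a <p>."""
    m = BLOCK.match(block)
    if not m:
        return f"<p>{html.escape(block)}</p>"
    tag, cls, ident, text = m.groups()
    attrs = ""
    if cls:
        attrs += f' class="{cls}"'
    if ident:
        attrs += f' id="{ident}"'
    return f"<{tag}{attrs}>{html.escape(text)}</{tag}>"


print(render_block("p(note#intro). Hi & bye"))
```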

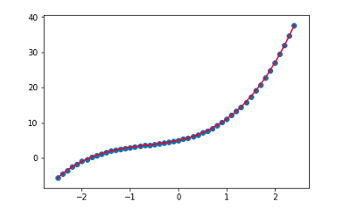

Trade-archivist

While I was fiddling with CNNs and Transformers for text processing, I decided to try them on time series prediction, such as stock and cryptocurrency prices.

So I wrote a tool that:

- Collects real-time logs of all trading events from a set of cryptocurrency exchanges and stores them in a binary format as a series of Bzip archives. The events arrive through WebSocket connections to each exchange's API.

- Acts as a server that provides a binary API for re-transmitting trading events as they happen, and for requesting arbitrary slices of the trading history (up to the present moment). This can be used for backtesting, training various ML algorithms, or streaming the same real-time logs from the exchanges (in which case the server acts as a proxy with a unified API to all exchanges).
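A minimal Python sketch of the storage side described above, assuming a hypothetical fixed-size record layout (timestamp in milliseconds, price, amount, side) that the original tool may not use: events are packed with `struct`, compressed into bzip2 chunks with the `bz2` module, and a time slice is served by binary-searching the chunks' first timestamps. It also assumes chunks are sorted and do not overlap in time.

```python
import bisect
import bz2
import struct

# Hypothetical fixed-size record: timestamp in ms (int64), price (float64),
# amount (float64), side (uint8, 0 = buy, 1 = sell); little-endian, no padding.
RECORD = struct.Struct("<qddB")


def pack_chunk(events):
    """Serialize (ts, price, amount, side) tuples and compress them into one bzip2 chunk."""
    return bz2.compress(b"".join(RECORD.pack(*e) for e in events))


def unpack_chunk(blob):
    """Decompress a chunk and decode it back into event tuples."""
    raw = bz2.decompress(blob)
    return [RECORD.unpack_from(raw, off) for off in range(0, len(raw), RECORD.size)]


def history_slice(chunks, start_ms, end_ms):
    """Return events with start_ms <= ts < end_ms.

    `chunks` is a list of (first_ts, blob) pairs sorted by first_ts, with the
    events inside each blob sorted by timestamp as well.
    """
    firsts = [first for first, _ in chunks]
    # The last chunk starting at or before start_ms may still hold matching events.
    i = max(bisect.bisect_right(firsts, start_ms) - 1, 0)
    out = []
    for first, blob in chunks[i:]:
        if first >= end_ms:
            break  # this chunk and all later ones start after the slice
        out.extend(e for e in unpack_chunk(blob) if start_ms <= e[0] < end_ms)
    return out


events = [(ts, 100.0 + ts, 0.5, ts % 2) for ts in range(10)]
chunks = [(events[i][0], pack_chunk(events[i:i + 4])) for i in range(0, 10, 4)]
window = history_slice(chunks, 3, 6)  # events with timestamps 3, 4, 5
```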

This hasn't made me rich, but it helped me realize that WaveNet is good not only for natural speech processing :)

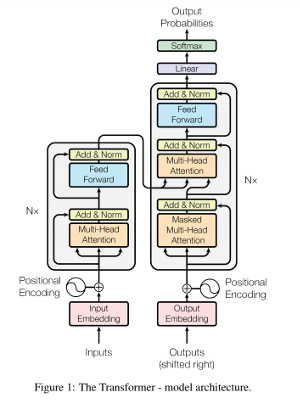

Keras-transformer

Keras-transformer is a library implementing nuts and bolts for building (Universal) Transformer models using Keras. It allows you to assemble a multi-step Transformer model in a flexible way.

The library supports positional encoding and embeddings, attention masking, memory-compressed attention, and ACT (Adaptive Computation Time). All pieces of the model (like self-attention, activation functions, layer normalization) are available as Keras layers, so, if necessary, you can build your own version of the Transformer by rearranging them or replacing some of them.

For those who don't know, the (Universal) Transformer is a deep learning architecture described in arguably two of the most impressive DL papers of 2017 and 2018: "Attention Is All You Need" and "Universal Transformers" by the Google Brain team.

Their authors introduced multi-head self-attention (made recurrent in the Universal Transformer), which has inspired a big wave of new research models ever since, demonstrating new state-of-the-art results in many Natural Language Processing tasks, including translation, parsing, question answering, and even algorithmic tasks.

Unlike classical recurrent neural networks, the Transformer trains much faster, both in time per epoch and in total wall-clock time. It's also capable of efficiently handling multiple long-term dependencies in texts.

When applied to text generation, the Transformer creates more coherent stories, which don't degrade in quality as they grow longer, as is typically the case with recurrent networks.
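To make "attention masking" concrete, here is a minimal pure-Python sketch of single-head scaled dot-product self-attention with a causal mask, where position i may attend only to positions 0..i. It illustrates the mechanism only and is not keras-transformer's implementation, which builds these pieces as Keras layers over tensors; the function name is hypothetical.

```python
import math


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v are lists of vectors (lists of floats), one vector per position.
    """
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # The mask: position i only scores keys at positions 0..i.
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        # Output is the softmax-weighted average of the visible value vectors.
        out.append([
            sum(w * v[j][c] for j, w in enumerate(weights)) / total
            for c in range(len(v[0]))
        ])
    return out
```

With all-zero queries and keys every visible position gets the same weight, so output i is simply the mean of the first i + 1 value vectors, which makes the effect of the mask easy to see.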

KERL

KERL is a collection of various Reinforcement Learning algorithms and related techniques implemented purely using Keras.

The goal of the project is to create implementations of state-of-the-art RL algorithms, as well as a platform for developing and testing new ones, while keeping the code simple and portable thanks to Keras and its ability to use various backends. This makes KERL very similar to OpenAI Baselines, only with a focus on Keras.

What works in KERL:

- Advantage Actor-Critic (A2C) [original paper]

- Proximal Policy Optimization (PPO) [original paper]

- World Models (WM) [original paper]

All algorithms support Pop-Art, the adaptive normalization of returns described in DeepMind's paper "Learning values across many orders of magnitude". This greatly simplifies training, often making it possible to just throw the algorithm at a task and get a decent result.
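A scalar Python sketch of the Pop-Art idea, written from the paper's general description rather than taken from KERL: running estimates of the first and second moments of the value targets give a mean μ and scale σ, and whenever they change, the linear output head (w, b) is rescaled so that the unnormalized prediction σ·(w·h + b) + μ stays the same. The class name, the step size `beta`, and the initial statistics are assumptions.

```python
import math


class PopArt:
    """Scalar Pop-Art sketch: adaptively normalizes value targets while
    preserving the unnormalized outputs of a linear head (w, b)."""

    def __init__(self, n_features, beta=3e-4):
        self.beta = beta              # step size for the running moments (assumed)
        self.mu, self.nu = 0.0, 1.0   # first and second moment estimates
        self.w = [0.0] * n_features   # weights of the normalized linear head
        self.b = 0.0

    @property
    def sigma(self):
        # Standard deviation from the moments, clipped away from zero.
        return math.sqrt(max(self.nu - self.mu ** 2, 1e-8))

    def normalized(self, h):
        return sum(wi * hi for wi, hi in zip(self.w, h)) + self.b

    def unnormalized(self, h):
        return self.sigma * self.normalized(h) + self.mu

    def normalize_target(self, g):
        # The head is trained against targets in normalized units.
        return (g - self.mu) / self.sigma

    def update_stats(self, target):
        old_mu, old_sigma = self.mu, self.sigma
        self.mu = (1 - self.beta) * self.mu + self.beta * target
        self.nu = (1 - self.beta) * self.nu + self.beta * target ** 2
        new_sigma = self.sigma
        # "Preserve Outputs Precisely": rescale the head so that
        # sigma * (w.h + b) + mu is unchanged by the new statistics.
        self.w = [wi * old_sigma / new_sigma for wi in self.w]
        self.b = (old_sigma * self.b + old_mu - self.mu) / new_sigma
```

Because the head is rescaled together with the statistics, a sudden jump in the magnitude of returns changes the normalized targets without disturbing what the value function currently predicts.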

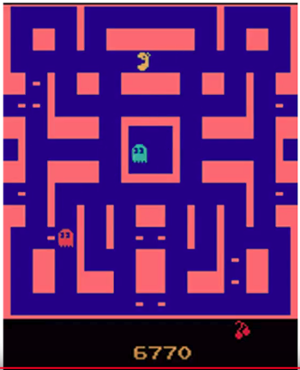

With KERL you can quickly train various agents to play Atari games from pixels and dive into the details of their implementation. Here's an example of such an agent trained with KERL (YouTube video): Deep RL A2C Agent Playing Ms. Pacman

Limitations: currently KERL does not support continuous control tasks, and so far it has been tested only on various Atari games supported by The Arcade Learning Environment via OpenAI Gym.

Avalanche

Avalanche is a simple deep learning framework written in C++ and Python. Unlike most existing tools, it is based on OpenCL, an open computing standard. This allows Avalanche to work on pretty much any GPU, including ones made by Intel and AMD, even quite old models.

The project was created as an attempt to better understand how modern deep learning frameworks like TensorFlow do their job and to practice programming GPUs. Avalanche is based on a computational graph model.

It supports automatic differentiation, broadcasting operations, and automatic memory management, and can utilize multiple GPUs if needed.

The framework also works as a backend for Keras, so if you know Keras, you can start using Avalanche without having to learn anything new.
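A toy Python sketch of the computational-graph idea behind reverse-mode automatic differentiation, on scalars in plain Python rather than on OpenCL tensors: each node records its parents together with the local derivative, and `backward()` walks the graph in reverse topological order, applying the chain rule. The `Node` class is hypothetical and is not Avalanche's API.

```python
class Node:
    """Scalar node in a tiny computational graph with reverse-mode autodiff."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # sequence of (parent_node, local_derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Node(self.value + other.value, [(self, 1.0), (other, 1.0)])

    def __mul__(self, other):
        return Node(self.value * other.value, [(self, other.value), (other, self.value)])

    def backward(self):
        # Topologically order the graph so every node comes after its parents.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        # Chain rule from the output back to the inputs.
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad


x, y = Node(3.0), Node(4.0)
z = x * y + x  # dz/dx = y + 1, dz/dy = x
z.backward()
```

Gradients accumulate with `+=` because a node such as `x` can feed into several operations; a tensor framework does the same thing, only with broadcasting-aware local derivatives.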

CartGP

CartGP is a very simple and minimalistic C++/Python library implementing Cartesian Genetic Programming (CGP). The library currently supports the classic form of CGP, where nodes are arranged in a grid and no recurrent connections are allowed.
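A minimal Python sketch of the classic CGP representation, not CartGP's actual API: a rows × cols grid of nodes, each gene being (function index, input, input), where a node may read only program inputs or nodes from earlier columns, so the graph has no recurrent connections. The function set `FUNCS` and the helper names are hypothetical; evolution (for example, point mutation plus (1+λ) selection) would be built on top of this.

```python
import operator
import random

FUNCS = [operator.add, operator.sub, operator.mul]  # hypothetical function set


def random_genome(n_inputs, rows, cols, n_outputs, rng):
    """Classic CGP genome: a rows x cols grid of (func, in1, in2) genes plus output genes."""
    nodes = []
    for c in range(cols):
        # Nodes in column c may connect only to program inputs or earlier columns.
        max_src = n_inputs + c * rows
        for _ in range(rows):
            nodes.append((rng.randrange(len(FUNCS)), rng.randrange(max_src), rng.randrange(max_src)))
    outputs = [rng.randrange(n_inputs + rows * cols) for _ in range(n_outputs)]
    return nodes, outputs


def evaluate(genome, inputs):
    """Compute every node in column order, then read the output genes."""
    nodes, outputs = genome
    values = list(inputs)
    for f, a, b in nodes:  # column-major order: sources are always computed already
        values.append(FUNCS[f](values[a], values[b]))
    return [values[o] for o in outputs]


# Hand-written 1 x 2 genome computing (x + y) * x: node 2 = x + y, node 3 = node2 * x.
genome = ([(0, 0, 1), (2, 2, 0)], [3])
print(evaluate(genome, [2, 3]))
```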

Check this Jupyter notebook to see how to use the library from Python.

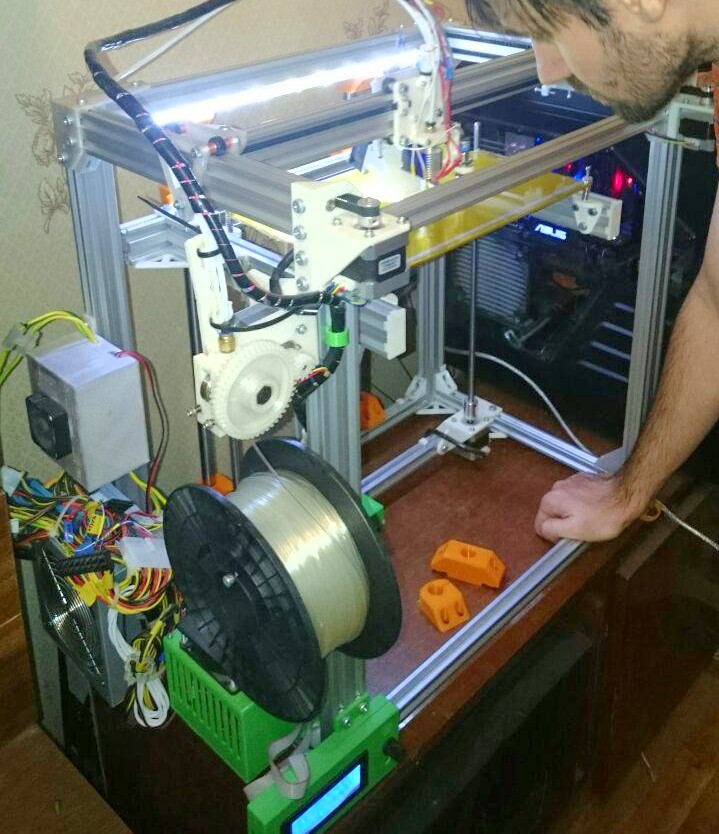

Interests

Apart from being a software developer and AI enthusiast, I enjoy running, bicycling, modeling devices in FreeCAD, and printing them on my own DIY 3D printer.

I am also familiar with electronics at a level where I can design and build, entirely from scratch, a power supply of a few hundred watts or an MCU-based digital sensor.

Finally, I love plants and gardening with my family. I even have a blog entirely dedicated to this activity.